The latest blog entries are shown below. All entries are found under all journal entries.

I wanted to migrate my Home Assistant Operating System (HAOS) instance from a Raspberry Pi to an old PC that was apparently older than UEFI. HAOS only supports UEFI boot, however. I had read about some community efforts to install Grub for BIOS on top of HAOS. This is not too difficult to do. What seems difficult is making this configuration stable across HAOS updates, so that they first of all work at all with the A/B update mechanism, and secondly remain working even as HAOS changes across major releases. I took a different path, which is to make the PC quack like UEFI by inserting another bootloader stage before HAOS: Clover EFI Bootloader.

Clover is primarily used to make a PC look sufficiently like a Mac so that macOS can be installed and run. macOS has many dependencies on EFI which need to be emulated by Clover. Linux in contrast is much easier to boot, so most parts of Clover are not necessary for this hack and its configuration will be comparatively simple.

I eventually succeeded in booting a mostly unmodified HAOS Generic x86-64 in UEFI mode. However, I quickly realised that my intended use-case wouldn't work, which was to use the GPU in this old box for hardware accelerated video decoding and analytics in Frigate NVR. This is because HAOS doesn't include drivers for NVIDIA GPUs. (The GPU is much newer than the motherboard.)

So I scrapped that idea for now and thus cannot say whether this method works as intended long-term across updates. The installation succeeded though, so I'm putting this out there as a "how I did it", not a "how-to guide".

All the steps are done from a Linux live CD (or USB etc.). I used Debian Live but any distro containing common system utilities should work.

Boot sequence with Clover

From the Clover Manual (PDF):

BIOS→Master Boot Record→Partition/Volume Boot Record→boot→CLOVERX64.efi→OSLoader

where the executable code in Master Boot Record and Partition/Volume Boot Record are parts of Clover, boot is an executable file in the EFI System Partition, also from Clover, and OSLoader is Grub for UEFI as shipped by HAOS.

Specifically, OSLoader will be /efi/boot/bootx64.efi from the ESP on the HAOS image.

The manual is detailed but somewhat difficult to read. The ArchWiki entry on Clover gives an overview that is easier to read.

Clover requires that the EFI System Partition is FAT32 and won't accept FAT12 or FAT16.

This is allegedly UEFI specification compliant (although I find that the UEFI specification is not very firm on this point), but many UEFI implementations are apparently fine with a FAT16 ESP.

The ESP in HAOS is formatted as FAT16.

I tried it and it didn't work.

I had to reformat it as FAT32.

Unfortunately, the ESP volume is too small to be valid FAT32 according to some sources (like mkfs.fat).

The HAOS ESP is exactly 32 MiB but the smallest valid FAT32 volume is a tad larger than 32 MiB.

How much larger depends on who you ask, and maybe which parameters are used during formatting (sectors per cluster etc.).

I guess it depends on which historic FAT32 implementations you want to be compliant with.

I haven't bothered to figure out the (most) correct answer.
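For a rough idea of where such a lower bound can come from, here is a minimal sketch in Python. It assumes the cluster-count rule from Microsoft's FAT specification (a volume with fewer than 65525 clusters is not treated as FAT32), 32 reserved sectors and two FAT copies. Those are common formatter defaults, not values checked against mkfs.fat or Clover, and the function name and parameters are made up for this illustration.

```python
import math

# Assumption: Microsoft's FAT specification treats a volume as FAT32
# only if it has at least this many data clusters.
MIN_FAT32_CLUSTERS = 65525


def min_fat32_bytes(sector_size=512, sectors_per_cluster=1,
                    reserved_sectors=32, num_fats=2):
    """Estimate the smallest volume size that still counts as FAT32."""
    data_sectors = MIN_FAT32_CLUSTERS * sectors_per_cluster
    # Each FAT32 table entry is 4 bytes; two entries are reserved.
    fat_bytes = (MIN_FAT32_CLUSTERS + 2) * 4
    fat_sectors = math.ceil(fat_bytes / sector_size)
    total_sectors = reserved_sectors + num_fats * fat_sectors + data_sectors
    return total_sectors * sector_size


if __name__ == "__main__":
    for spc in (1, 2, 8):
        size = min_fat32_bytes(sectors_per_cluster=spc)
        print(f"{spc} sector(s) per cluster: {size / 2**20:.2f} MiB")
```

Under these assumptions, one sector per cluster gives about 32.51 MiB, which is consistent with "a tad larger than 32 MiB". Larger clusters push the minimum up quickly, which may explain why different sources quote different numbers.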

What this means is that the ESP needs to be enlarged and subsequent partitions shifted over a bit. I made it 100 MiB. I didn't try formatting the ESP in-place as a 32 MiB FAT32 volume to see if Clover would accept it. If it does, then many of these steps are unnecessary. Again, this is how I did it, but if I did this again I would start by just reformatting the existing ESP.

Partitioning

I made a new GUID Partition Table with a 100 MiB ESP first and then eight more partitions corresponding to the layout on the HAOS image.

The partition layout is described in the Home Assistant Developer Docs, section Partitioning.

Creating a fresh partition table was a mistake, as HAOS requires at a minimum that the partition unique GUID of the system partition matches the expected value from the image, and that the filesystem label on the ESP matches as well.

Otherwise the HAOS boot sequence would fail at various stages due to not finding all its volumes.

I later had to fix this up using gdisk to copy the unique GUID from each partition on the image to the corresponding partition on my target disk, as well as the partition name for good measure.

Use gdisk command i to "show detailed information on a partition" such as the unique GUID and partition name on the image.

Use command c to "change a partition's name" on the target partition.

Use command x ("extra functionality (experts only)"), then command c to "change partition GUID" on the target partition.

Use mkfs.fat -F 32 to specifically make a FAT32 filesystem on the ESP, overriding the automatic choice between FAT12/16/32 that mkfs.fat would otherwise make.

fatlabel can be used to change the filesystem label on the ESP FAT32 filesystem.
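To compare the unique GUIDs and names of the image with those on the target disk without stepping through gdisk for every partition, something like the following Python sketch could be used. It is not part of what I actually ran. It assumes 512-byte logical sectors and the standard GPT layout (header at LBA 1, 128-byte entries), and read_gpt_entries is a made-up helper name.

```python
import struct
import uuid

SECTOR = 512  # assumption: 512-byte logical sectors


def read_gpt_entries(f):
    """Return (number, unique GUID, name) for each used GPT partition.

    f is a binary file object for a disk image or block device.
    """
    f.seek(SECTOR)  # the primary GPT header lives at LBA 1
    header = f.read(92)
    if header[:8] != b"EFI PART":
        raise ValueError("no GPT header found at LBA 1")
    # Offset 72: start LBA of the entry array, entry count, entry size.
    entries_lba, count, entry_size = struct.unpack_from("<QII", header, 72)
    f.seek(entries_lba * SECTOR)
    result = []
    for index in range(count):
        raw = f.read(entry_size)
        if len(raw) < 128:
            break  # truncated image
        if raw[:16] == bytes(16):
            continue  # all-zero type GUID marks an unused entry
        unique = uuid.UUID(bytes_le=raw[16:32])  # GPT GUIDs are mixed-endian
        name = raw[56:128].decode("utf-16-le").split("\x00", 1)[0]
        result.append((index + 1, str(unique), name))
    return result
```

Calling it once on the opened HAOS image and once on the target disk (for example open("/dev/sdb", "rb") as root) gives two lists that can be compared entry by entry. gdisk is still needed to actually change anything.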

Clover installation

Loop mount the Clover ISO at /clover_iso:

# mkdir /clover_iso

# mount -o loop /media/user/disk/Clover-5162-X64.iso /clover_iso

The commands from ArchWiki should generally apply, but these are the ones that I used.

Replace sdb with the target disk, the first volume of which will be the EFI System Partition.

Install boot0 and boot1 like this:

# cd /tmp

# cp /clover_iso/usr/standalone/i386/boot0af .

# cp /clover_iso/usr/standalone/i386/boot1f32alt .

# dd if=/dev/sdb count=1 bs=512 of=origMBR

# cp origMBR newMBR

# dd if=boot0af of=newMBR bs=1 count=440 conv=notrunc

# dd if=newMBR of=/dev/sdb count=1 bs=512

# dd if=/dev/sdb1 count=1 bs=512 of=origbs

# cp boot1f32alt newbs

# dd if=origbs of=newbs skip=3 seek=3 bs=1 count=87 conv=notrunc

# dd if=newbs of=/dev/sdb1 count=1 bs=512
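The dd invocations above are byte splicing, which is easy to get wrong. As an illustration only, the same two operations can be written as a Python sketch; the function names are made up, and it works on in-memory copies of the sectors rather than on the disk. The first 440 bytes of the MBR are boot code, while the rest holds the disk signature and partition table, which must be preserved. In a FAT32 boot sector, bytes 3 to 89 hold the OEM name and BIOS Parameter Block describing the filesystem, so those are taken from the original and everything else from Clover's boot1.

```python
SECTOR = 512


def splice_mbr(orig_mbr: bytes, boot0: bytes) -> bytes:
    """Boot code from boot0, disk signature and partition table from the disk."""
    if len(orig_mbr) < SECTOR or len(boot0) < 440:
        raise ValueError("input too short")
    # Same effect as: dd if=boot0af of=newMBR bs=1 count=440 conv=notrunc
    return boot0[:440] + orig_mbr[440:SECTOR]


def splice_pbr(orig_bs: bytes, boot1: bytes) -> bytes:
    """Boot code from boot1, filesystem description from the formatted ESP."""
    if len(orig_bs) < SECTOR or len(boot1) < SECTOR:
        raise ValueError("input too short")
    # Same effect as: dd if=origbs of=newbs skip=3 seek=3 bs=1 count=87 conv=notrunc
    # Offsets 3..89 (87 bytes) are kept from the original boot sector.
    return boot1[:3] + orig_bs[3:90] + boot1[90:SECTOR]
```

Comparing the output of these functions with newMBR and newbs before writing them back is a cheap sanity check that nothing outside the intended byte ranges was changed.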

Then copy the required files into the ESP filesystem:

# mkdir /esp

# mount /dev/sdb1 /esp

# cp -a /clover_iso/efi/clover/ /esp/EFI/

# cp /clover_iso/usr/standalone/i386/x64/boot6 /esp/boot

Don't worry about warnings from cp about not being able to copy metadata such as file ownership.

This is caused by using -a on a filesystem not supporting such attributes (FAT32).

Don't copy bootx64.efi from Clover.

This executable is only used when booting Clover from UEFI.

Which boot0 to use?

I don't think it matters too much actually.

ArchWiki suggests boot0ss which according to the Clover manual looks for a HFS+ partition signature.

If not found it falls back to the first FAT32 partition.

I used boot0af which checks the active partition first (I think this means the partition with the boot flag set).

If none, then it falls back to the EFI System Partition.

These two variants should thus in practice both work in this case.

I think my ESP unintentionally does have the boot flag set, so the first check in boot0af would select it.

However, even if it didn't, then it should still be selected due to being the ESP.

Which boot1 to use?

Either boot1f32 or boot1f32alt is fine.

Both are for FAT32 volumes.

The difference is that the alt-version has a 2 second pause where it waits for user input to override which next stage to use.

HAOS installation

Loop mount partition 1 from the HAOS image and copy the entire /efi/boot from it onto the target ESP:

# losetup --show --find /media/user/disk/haos_generic-x86-64-15.2.img

/dev/loop1

# partprobe /dev/loop1

# mkdir /haos_esp

# mount /dev/loop1p1 /haos_esp

# cp -a /haos_esp/efi/boot /esp/EFI/

/efi/boot contains the Grub UEFI executable and its configuration.

Copy HAOS partitions 2-9 onto the target disk using dd or whatever.

Configuring Clover

Clover will be configured to boot Grub without showing a menu. You may wish to pause here, reboot and verify that Clover with its default configuration starts and shows a GUI. If it does, HAOS Grub can be manually started by entering a UEFI shell and typing:

fs0:

cd efi\boot

bootx64.efi

If instead there is a blinking cursor and no more progress, that is a sign that the pre-loader "boot" cannot be found.

This happened to me when the ESP was formatted as FAT16.

The boot file was there, but the stage 1 bootloader (in the Partition Boot Record) probably couldn't read the filesystem at all.

If successful then reboot into the live CD, mount the target ESP at /haos_esp and continue.

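Or, if feeling lucky, skip the previous part and continue without testing. In that case the target ESP is still mounted at /esp, so use that path instead of /haos_esp below.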

Replace the contents of /haos_esp/efi/clover/config.plist with the following:

<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "https://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
    <key>Boot</key>
    <dict>
        <key>DefaultVolume</key>
        <string>hassos-boot</string>
        <key>DefaultLoader</key>
        <string>\efi\BOOT\bootx64.efi</string>
        <key>Fast</key>
        <true/>
    </dict>
    <key>GUI</key>
    <dict>
        <key>Custom</key>
        <dict>
            <key>Entries</key>
            <array>
                <dict>
                    <key>Hidden</key>
                    <false/>
                    <key>Disabled</key>
                    <false/>
                    <key>Volume</key>
                    <string>hassos-boot</string>
                    <key>Path</key>
                    <string>\efi\BOOT\bootx64.efi</string>
                    <key>Title</key>
                    <string>HAOS</string>
                    <key>Type</key>
                    <string>Linux</string>
                </dict>
            </array>
        </dict>
    </dict>
</dict>
</plist>

This is from the ArchWiki entry on Clover, section chainload systemd-boot, lightly edited.

Explanations:

- DefaultVolume and Volume identify the ESP volume, either based on its GUID or name. Not sure if name refers to the partition name or filesystem label. In the HAOS image they are the same, so best keep it that way.

- DefaultLoader and Path are the path to the EFI executable from the root of DefaultVolume/Volume respectively.

- Fast is the option that disables the Clover GUI.

- I don't know what Type (Linux) is used for, or if the false options actually need to be set as such.
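Hand-editing plist XML is error-prone, so as an alternative the same structure can be generated with Python's plistlib from the standard library. This is a sketch and not how I produced the file above. The dictionary mirrors the keys shown above, and VOLUME and LOADER are just names chosen for this example.

```python
import plistlib

VOLUME = "hassos-boot"             # filesystem label / partition name of the ESP
LOADER = r"\efi\BOOT\bootx64.efi"  # HAOS Grub, relative to the ESP root

config = {
    "Boot": {
        "DefaultVolume": VOLUME,
        "DefaultLoader": LOADER,
        "Fast": True,  # skip the Clover GUI
    },
    "GUI": {
        "Custom": {
            "Entries": [
                {
                    "Hidden": False,
                    "Disabled": False,
                    "Volume": VOLUME,
                    "Path": LOADER,
                    "Title": "HAOS",
                    "Type": "Linux",
                }
            ]
        }
    },
}

# sort_keys=False keeps the key order used in the dictionary above.
xml_bytes = plistlib.dumps(config, fmt=plistlib.FMT_XML, sort_keys=False)

if __name__ == "__main__":
    print(xml_bytes.decode("utf-8"))
```

Redirecting the output to config.plist gives a file that is at least guaranteed to be well-formed. Whether Clover treats it identically to the hand-written version is something I have not tested.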

Unmounting and rebooting

I always carefully unmount anything I mount on a live CD before rebooting. Generally, do the opposite steps in the opposite order compared to when setting up:

# umount /haos_esp

# umount /esp

# umount /clover_iso

# losetup -d /dev/loop1

After a reboot with the configuration from the previous section Clover should automatically boot HAOS Grub without showing a GUI.

Hardware details

Probably not of interest to anyone, but in case it is the old PC has these specs:

- Asus P7P55 LX

- Intel Core i5-760

- GeForce 960

I'm watching modern era Doctor Who (2005-present) for the second time. Doctor Who is a TV series that is dear to me but at the same time somewhat uneven, so for the benefit of future me and hopefully others I'm writing a short episode guide during this rewatch.

I try to avoid spoilers as far as possible except when needed to justify my verdict or when spoiler warnings are well past their due date, such as which episodes have the Doctor reincarnate. Double episodes are combined into one review.

These are the entries published so far:

2025 update: This never worked reliably. Time Machine backups would only work a few times, then it would no longer recognize the destination. Deleting the backup and starting over helped, but only temporarily. In the end I gave up.

I recently upgraded Debian on my home server by means of reinstallation. Before this I had Netatalk set up to serve a directory over AFP to be used as the Time Machine backup target for the girlfriend's MacBook. In the beginning Time Machine only supported AFP for remote backups, but for quite a few years now it has also been able to use the SMB protocol version 3.

I figured that by now the relevant parts to support that server side ought to have been implemented in Samba, trickled down the distribution chain and with some luck ended up packaged in the latest Debian. That turned out to be correct and what follows is a summary of my configuration and findings during researching the correct settings.

Base configuration

You can find any number of online guides on how to set up a decent base configuration of Samba. The stock smb.conf shipped with Debian is pretty cluttered but contains mostly reasonable default values. These are the deviations from it that I made, in the [global] section:

interfaces = 127.0.0.0/8 br0

bind interfaces only = yes

I have multiple network interfaces for virtualization purposes and only want Samba shares on one of them (br0). The man page for smb.conf(5) tells you that the loopback address must be listed in interfaces:

If bind interfaces only is set and the network address 127.0.0.1 is not added to the interfaces parameter list smbpasswd(8) may not work as expected due to the reasons covered below.

Microsoft has discouraged the use of the original SMB protocol for approximately 100 years now. I don't plan to use any devices that do not support SMB 3.0 so let's set that as the minimum version required:

server min protocol = SMB3_00

I then removed all the default shares (home directories and printers) and added a few of my own. For each share I set a comment, path and read only. I'm not sure whether the comment is necessary. The rest of the parameters should be fine at their default values. I have given up trying to get guest access to work and now have user accounts everywhere instead.

User accounts

Simply run smbpasswd -a <username> for each user. The username should already exist as a Unix user account (create it with useradd otherwise). This allows the use of Unix filesystem permissions also for SMB shares to make things simple.

Additional configuration requirements

We are not done yet. For Time Machine to accept an SMB share as a valid target a few Apple specific protocol extensions need to be enabled. These are needed to support alternate data streams (e.g. Apple resource forks) and are implemented in the Samba VFS module fruit. I recommend that you read through the entirety of the man page vfs_fruit(8).

Mind especially the following paragraph of the description:

Be careful when mixing shares with and without vfs_fruit. OS X clients negotiate SMB2 AAPL protocol extensions on the first tcon, so mixing shares with and without fruit will globally disable AAPL if the first tcon is without fruit.

For this reason I recommend placing some of the fruit configuration in the [global] section so that it affects all shares.

[global] section

To begin with, the relevant VFS modules need to be enabled using the vfs objects parameter. fruit is the module that implements the protocol features. It depends on streams_xattr for storing the alternate data streams in extended attributes, so that also needs to be loaded.

As an inheritance from Windows some characters (such as the colon :) are illegal in SMB, while they are allowed on both macOS and Linux. This means that in order to transfer them over SMB they need to be encoded somehow, and Time Machine uses a private Unicode range for this. This is totally fine, but can (allegedly) look a bit weird if listing the files on the server. So, optionally the catia module can be used to reverse this encoding server side.

The Samba wiki page Configure Samba to Work Better with Mac OS X tells me that the module order is important and that the correct order for these three modules is this:

vfs objects = catia fruit streams_xattr

Next, the fruit module needs to be told where to store the alternate data streams. The default storage is a file, which is slow but works with every filesystem. It can also use xattrs, but only with filesystems that support very large xattrs such as ZFS, the greatest filesystem that ever was or will be. As it happens, ZFS is what I use and thus I add:

fruit:resource = xattr

To tell fruit to reverse the encoding of the aforementioned illegal characters using the catia module, the following parameter must be set:

fruit:encoding = native

I also enabled fruit:copyfile which enables a feature that lets the client request a server side file copy for increased performance. It is not enabled by default and I cannot find a documented reason why.

Per share configuration

The last part necessary is to mark a specific share as an allowed Time Machine target. This is done using the parameter

fruit:time machine = yes

Since Time Machine will fill the target with as many backups as there is space for before removing old ones, it is a good idea to set a reasonable advertised limit. Note that, according to vfs_fruit(8), this only works as intended when the share is used solely as a Time Machine target.

fruit:time machine max size = 1536G

While it is possible to place also these parameters in the [global] section, you probably don't want to allow all shares to be used as Time Machine destinations.
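Since the settings end up spread over [global] and a share section, a small checker can catch the two mistakes that are easiest to make: wrong module order and a share that is not marked as a Time Machine target. The following Python sketch is an illustration under assumptions. The parser is a simplification that ignores includes and line continuations, and the share name timemachine and its path are made up.

```python
MODULE_ORDER = ["catia", "fruit", "streams_xattr"]

EXAMPLE = """
[global]
    vfs objects = catia fruit streams_xattr
    fruit:resource = xattr
    fruit:encoding = native

[timemachine]
    path = /tank/timemachine
    read only = no
    fruit:time machine = yes
    fruit:time machine max size = 1536G
"""


def parse_smb_conf(text):
    """Parse smb.conf-style text into {section: {parameter: value}}."""
    sections, current = {}, None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":
            continue  # blank line or comment
        if line.startswith("[") and line.endswith("]"):
            current = sections.setdefault(line[1:-1].strip().lower(), {})
        elif "=" in line and current is not None:
            key, value = line.split("=", 1)
            # Parameter names are case-insensitive; collapse extra spaces.
            current[" ".join(key.lower().split())] = value.strip()
    return sections


def time_machine_problems(sections, share):
    """Return a list of human-readable problems, empty if none were found."""
    problems = []
    modules = sections.get("global", {}).get("vfs objects", "").split()
    present = [m for m in modules if m in MODULE_ORDER]
    for required in ("fruit", "streams_xattr"):
        if required not in present:
            problems.append(f"[global] vfs objects lacks {required}")
    if present != [m for m in MODULE_ORDER if m in present]:
        problems.append("vfs objects are not in the order catia, fruit, streams_xattr")
    flag = sections.get(share.lower(), {}).get("fruit:time machine", "no")
    if flag.lower() not in ("yes", "true", "1"):
        problems.append(f"[{share}] is not marked with fruit:time machine = yes")
    return problems


if __name__ == "__main__":
    print(time_machine_problems(parse_smb_conf(EXAMPLE), "timemachine"))
```

Samba's own testparm remains the authoritative syntax check. This sketch only looks at the handful of parameters discussed in this section.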

ZFS specific configuration

Extended attributes are enabled by default in ZFS (xattr=on). The default storage is directory based, which is compatible with very old (pre-OpenZFS) implementations. The last open source version of OpenSolaris (Nevada b137), as well as all OpenZFS versions as far as I am aware, support storing extended attributes as system attributes, which greatly increases their performance due to fewer disk accesses. To switch to system attributes:

zfs set xattr=sa <pool>/<filesystem>

Conclusion

That should be it! Restart smbd and nmbd (I never learned which service does what) to apply the configuration:

systemctl restart smbd nmbd

With a default Avahi configuration the configured volume should now appear as an available target in Time Machine.

It's been a while. I've recently migrated this site (back) to Ikiwiki. For the last few years this site was based on Grav. While the result was pretty enough, I found Grav to be a quite high maintenance CMS. I blame this mostly on the fact that Grav has decided to be a "stupid" PHP application. A stupid PHP application is installed and updated by visiting a website somewhere, manually downloading a ZIP archive and extracting it (on top of the old one).

Anyone who has used a Linux distribution knows that there is a better way to install and keep software up to date, and it is known as package management. PHP applications don't have to be stupid. It is perfectly possible to integrate them into the normal package management of any distribution which would make them as easy to update and maintain as any other package that is just "there" and just works.

Since I write here very rarely and irregularly, I've come to value low maintenance over most other benefits a CMS may have.

There are some other things I like about Ikiwiki:

Version control integration

Any CMS using flat files as storage can of course be version controlled. However, usually some degree of integration is desirable for smooth deployment. Ikiwiki has all this built in. For Grav I had to write a plugin, which I published here: grav-plugin-deploygit. I don't know if it still works or is still needed.

Static site generator

If I don't want to run it anymore, I can just disable and remove the Ikiwiki CGI. The web server would continue to serve the baked site, only the search function would stop working.

This also makes it very fast. Yes, a dynamic site server can also be fast, but a static one is fast by default.

Well-packaged in Debian

There is a list of all Ikiwiki instances on a system (/etc/ikiwiki/wikilist) which is used on package upgrades to refresh or rebuild everything as needed, making unattended upgrades possible and carefree. I don't think it is a coincidence that Ikiwiki's maintainers are or were Debian developers.

Domain-specific language with powerful primitives

Ikiwiki's directives like inline and map enable elaborate website constructs and reduce the need for third-party plugins. I don't like (third-party) plugins in general. Too often they don't work, and even if they work today they may no longer work tomorrow as a result of being developed separately from the base program.

On the other hand, making website features based on these primitives takes a lot of time and is bound to run into corner cases where they don't work like they should or the way you want. I have found that Ikiwiki is not an exception to this rule.

Issues with Ikiwiki

I alluded to this not being my first encounter with Ikiwiki. There was also a short period in early 2016 before Grav, and I had set up and used Ikiwiki on a job in 2014-2015. What made me go away was partly Ikiwiki's aforementioned corner cases where I couldn't make its directives work the way I wanted.

Another part was workflow issues. I mostly worked directly on the live site, pushing a commit for each update. This quickly made a mess of the revision history. The more sensible way of working with Ikiwiki is to have a local copy of the site that you can deploy to without committing to Git (alternatively, amending and force-pushing commits followed by a manual rebuild). That is essentially my current workflow. I might document it in more detail later, but it is similar to this.

Before 2016 I briefly used my home-cooked Python based CMS, which I wrote in 2013 and humbly named Writer's Choice. Its code is still available on GitHub.

Suppose, not entirely hypothetically, that you have a custom file type which is actually an existing type with a new file extension. Just for the sake of argument, let's say that the file type is Mozilla Archive Format (MAFF). This is actually a standard ZIP file which by its custom extension (.maff) is given a new purpose.

However, at least in Ubuntu 16.04 and earlier, there is no MIME type installed for MAFF. This causes programs like Nautilus that try to be smart about unknown file extensions to treat MAFF files like ZIP files, and suggest that they be opened in File-Roller. There doesn't seem to be a MIME type registered with IANA either. It is fairly easy to add a custom one though.

I'm writing this down mostly for my own reference. Since there is no standard MIME type for MAFF, I'll use application/x-maff which several sites on the internet (none authoritative though) suggest. First, put the following (adapting it to your needs) into a new file in ~/.local/share/mime/packages/something.xml. I don't think the file name is important, as long as it ends in .xml.

<?xml version="1.0" encoding="UTF-8"?>
<mime-info xmlns="http://www.freedesktop.org/standards/shared-mime-info">
    <mime-type type="application/x-maff">
        <glob pattern="*.maff"/>
        <comment>Mozilla Archive Format File</comment>
    </mime-type>
</mime-info>
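When adapting the snippet to another file type, the package file can also be generated rather than typed by hand. The following is a minimal sketch using Python's xml.etree.ElementTree (Python 3.8 or later for the xml_declaration argument). mime_package is a made-up helper name, and only the elements used above are covered.

```python
import xml.etree.ElementTree as ET

NS = "http://www.freedesktop.org/standards/shared-mime-info"


def mime_package(mime_type, pattern, comment):
    """Build a shared-mime-info package document as UTF-8 encoded bytes."""
    ET.register_namespace("", NS)  # serialize NS as the default namespace
    root = ET.Element(f"{{{NS}}}mime-info")
    entry = ET.SubElement(root, f"{{{NS}}}mime-type", {"type": mime_type})
    ET.SubElement(entry, f"{{{NS}}}glob", {"pattern": pattern})
    ET.SubElement(entry, f"{{{NS}}}comment").text = comment
    return ET.tostring(root, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    xml_bytes = mime_package("application/x-maff", "*.maff",
                             "Mozilla Archive Format File")
    print(xml_bytes.decode("utf-8"))
```

The output still has to be saved under ~/.local/share/mime/packages/ with a name ending in .xml before the database is updated.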

Then run the following command to update the user specific MIME database.

$ update-mime-database ~/.local/share/mime/

This will install the information above into the MIME database under .local/share/mime/. The result can be verified with the mimetype command:

$ mimetype 'Understanding the Xbox boot process_Flash structures - Hacking the Xbox.maff'

Understanding the Xbox boot process_Flash structures - Hacking the Xbox.maff: application/x-maff

Changes take effect in new Nautilus windows (in my testing), so close the existing windows and open a new one. Note that this is different from restarting Nautilus, as the process is usually still running in the background.

You could probably also do this change system-wide by placing the XML file in /usr/local/share/mime/packages/ (creating the directory if it doesn't exist) and updating the system-wide MIME database using:

$ sudo update-mime-database /usr/local/share/mime/

I prefer to keep such changes in my home directory though. My system is single-user anyway, and I reinstall the base operating system more often than I recreate my home directory.

In part 1 of this series I described the design of my HiFimeDIY T1 wooden case project and finished most of the fundamental parts of the chassis. In this segment I continue with the back panel where the connectors are mounted.

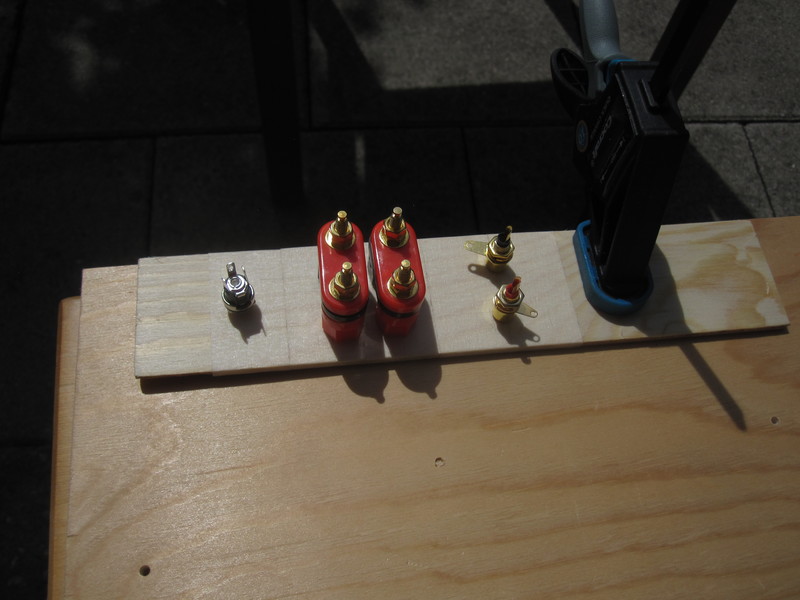

The connectors are, unsurprisingly, designed to be mounted in a thin metal chassis and therefore have quite short screw threads. The sides of the pre-made case I used are 6 mm solid wood, much too thick for the connectors. To mitigate this I opted to cut out a large part of the back and mount a thinner piece of plywood on top, which I could mount the connectors on.

I started by sawing a suitable piece of 3 mm plywood and marking the holes on masking tape. The masking tape was somewhat unnecessary but enabled me to make a bigger mess while marking without having to clean up the wood afterwards.


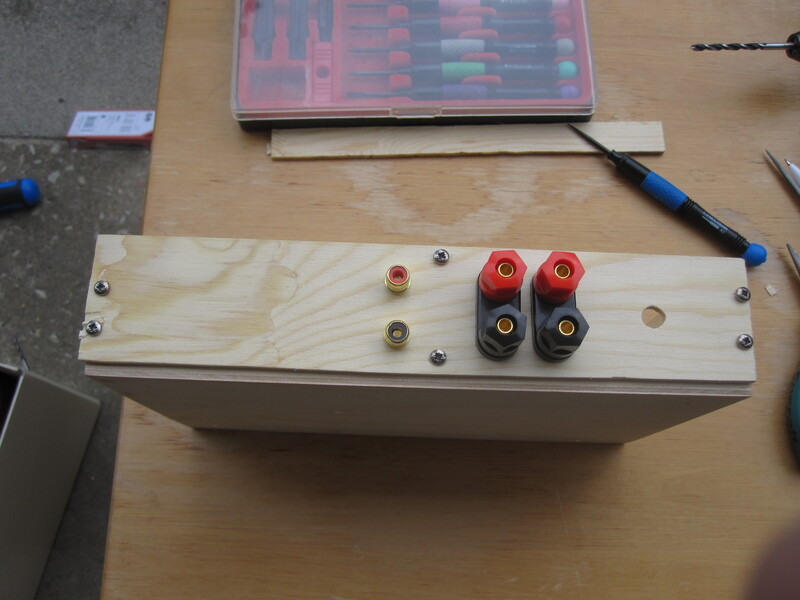

With the holes drilled and the connectors test-fitted it looked like this:


Then I had to make a rather large cut out in the box. I marked out a rectangle, drilled holes in the four corners and put the saw blade through one of the holes. The jigsaw worked great for this job.

I had to carve out some additional space for the large speaker connectors and the RCA input jacks. This is how the back panel ended up:

I mounted it on the case using sheet metal screws, since they were the only ones the hardware store offered in a suitable size. To avoid hitting the nails that hold the case together I had to place the screws somewhat asymmetrically.

The screw mounted rubber feet I also got from Clas Ohlson. These are actually intended for furniture but I found them to be excellent appliance feet as well.

As you can see in the top left corner I made the rookie mistake of not accounting for the appliance feet when I laid out the screw holes on the bottom of the case. Fortunately, the screws holding the PCB are countersunk so the overlap didn't cause any issues.

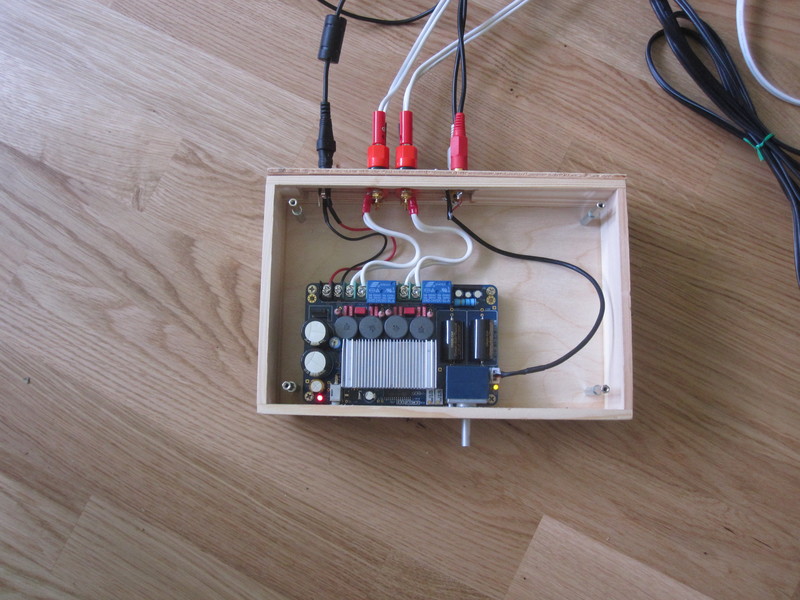

Finally, here is the finished amplifier with the cover off:

With the top cover on I used the amplifier for a few days to see if it worked and if I was satisfied with it. As the case would be completely shut I was a bit worried about heat dissipation. I borrowed an infrared thermometer from a friend to measure the amplifier temperature. After running it for an hour or so I found that the hottest parts on the board, the output inductors, reached about 50-55 °C. I think this is a bit on the hot side, considering that I might want the amplifier to remain functioning for tens of years if I like it. So I came up with a cooling solution, which I will describe in the next segment.

Update 2019: I never did write that followup segment. The cooling solution consisted of a grid of holes drilled in the top and bottom above the heatsink on the amplifier board. I'm not sure what difference it made in practice but in any case the amplifier has been working well for two and a half years now. I also oiled the outside of the wooden box to make it easier to keep clean. I've been quite happy with this project!

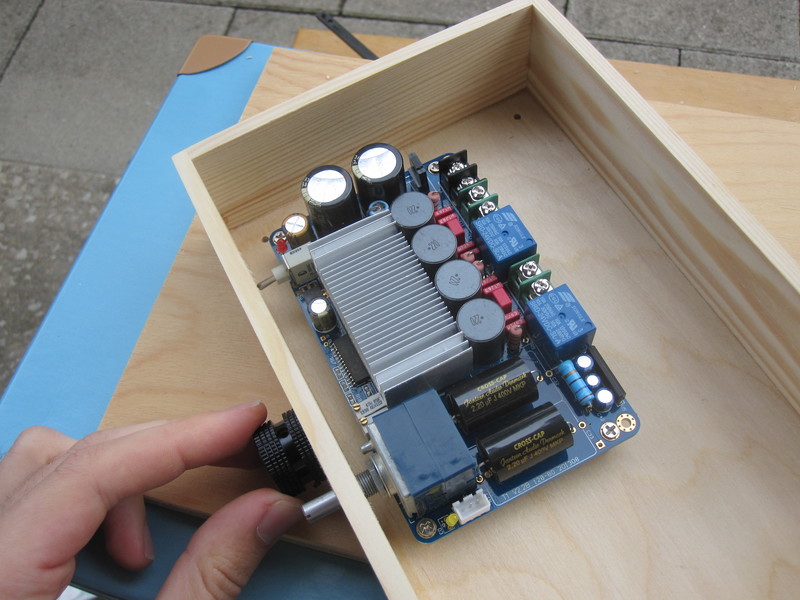

During the summer I built a wooden enclosure for the amplifier board I bought from HiFimeDIY. I will show the result right away and then go through the steps of building it.

Design

I like the look of unpainted wooden cases and furniture in general. I also like electric appliances with visible screws to show that they are intended to be opened, serviced and hacked on. To keep the case together I opted to use metal standoffs between the top and bottom, as it would allow me to take the case apart repeatedly without damaging the wood like wood screws would. To lift it off the ground I used rubber feet that were screwed in place, reusing the screws for the standoffs.

Finally I would like to take this opportunity to make a political statement on screw heads. To clearly show that the screws on this case are intended to be turned more than once without breaking I used hexalobular/Torx heads for all visible screws. As they can be hard to find in all sizes and lengths in tool stores I did cheat a bit and use more common Phillips screws internally. All screws are M3 size for simplicity.

Tools

This is basically my first woodworking project so I didn't have a lot of tools suitable for processing wood before I started. Since I wasn't sure whether I would like it I didn't want to spend too much on tools. But on the other hand I didn't want to waste money on too crappy tools that would fall apart while I was using them or just after the completion of this project, in case I did want to do more woodworking projects. For this reason I chose to use hand tools only, since research and experience from other non-woodworking projects tell me that good power tools will typically cost a lot unless they can be found second-hand.

I settled for the Clas Ohlson COCRAFT series for most of my tools. I have found that they provide good value in general. The exception is the sharp tools (knife, drill bits) where I spent a little more to get quality hardware. These were the ones I ended up using:

- Jigsaw

- Hand cranked drill. I bought slightly nicer Bosch wood drill bits.

- Two small clamps

- Morakniv crafting knife

- Set of small metal files

Materials

I found these nice small wooden boxes for not too much money, of which I used the largest 15x23 cm box in this project.

As the lid I got a small plywood board for free from a carpentry store waste pile.

I started by marking and sawing out a suitable sized board using a hand jigsaw.

I used too coarse a saw blade initially, which caused some chip-out on the back. Fortunately blades with several different tooth densities came with the saw.

I then lined up the box and lid and drilled holes for the four standoffs. To precisely mark the holes near the corners I marked the hole on a post-it note that I could more easily line up in the corner. Obviously, if I made the case from scratch I would instead drill all the holes before assembly.


Next I drilled the larger holes on the front panel for the on/off switch and the volume potentiometer shaft. To prevent chip-out I clamped the box to a sacrificial plywood board.


I opted to not put any blinkenlights in the front panel. As I wrote in the first impressions article the output relays switch on a few seconds after power on which produces a clicking sound. This will do for a power on & amplifier happiness indicator.


The front panel holes were off by a millimeter or so, which I was able to carve and file out. I also had to make a smaller hole for the pin next to the potentiometer shaft that I believe is intended to secure the potentiometer against a panel.


With the amplifier board in its intended position I marked the shape of its standoffs on the bottom board. I was then able to mark, tap and drill the four holes for the board.


At this point I took a break for the day and will also do so in this article series. Next up is the back panel with the connectors.

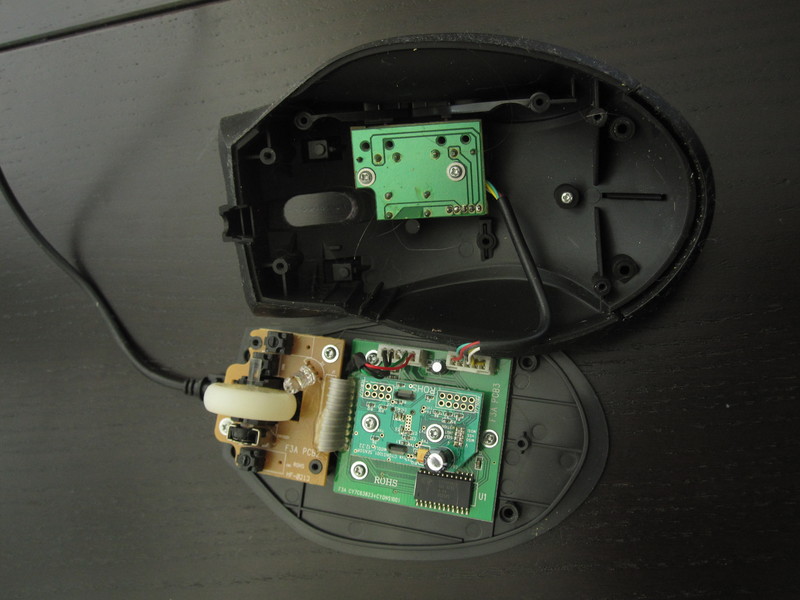

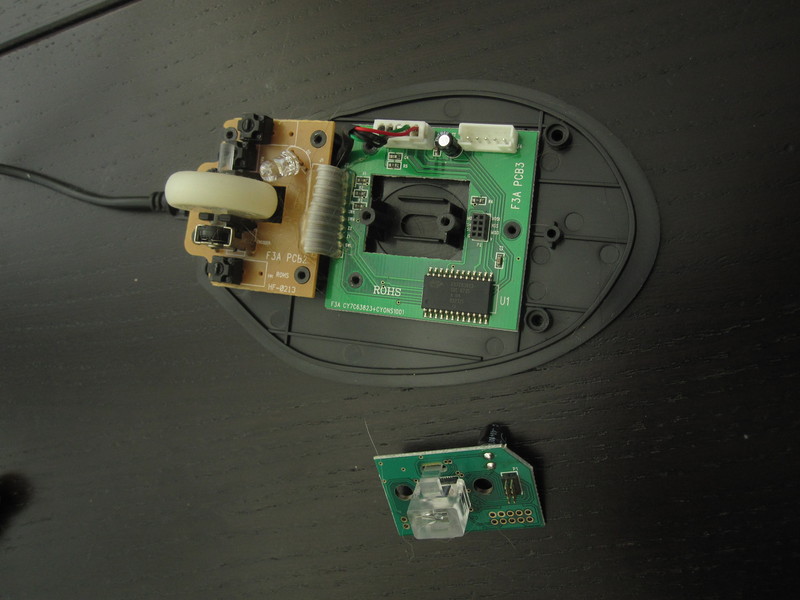

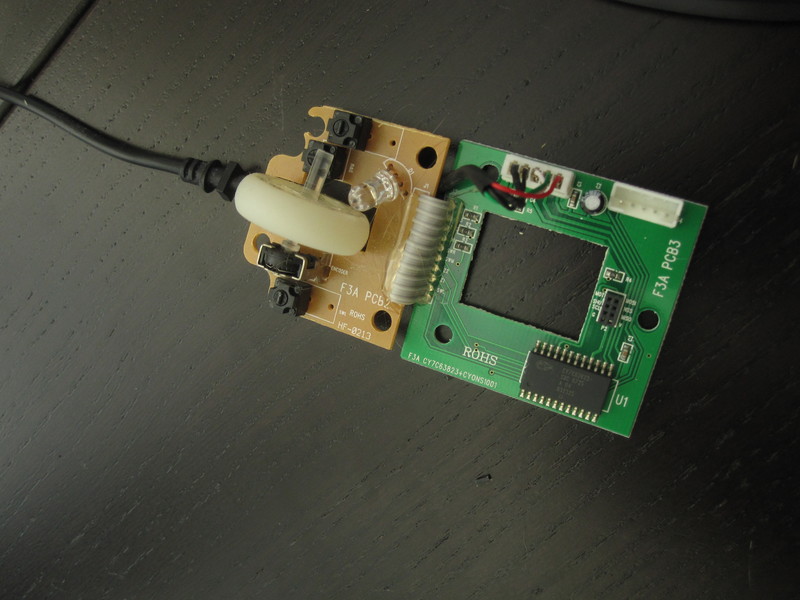

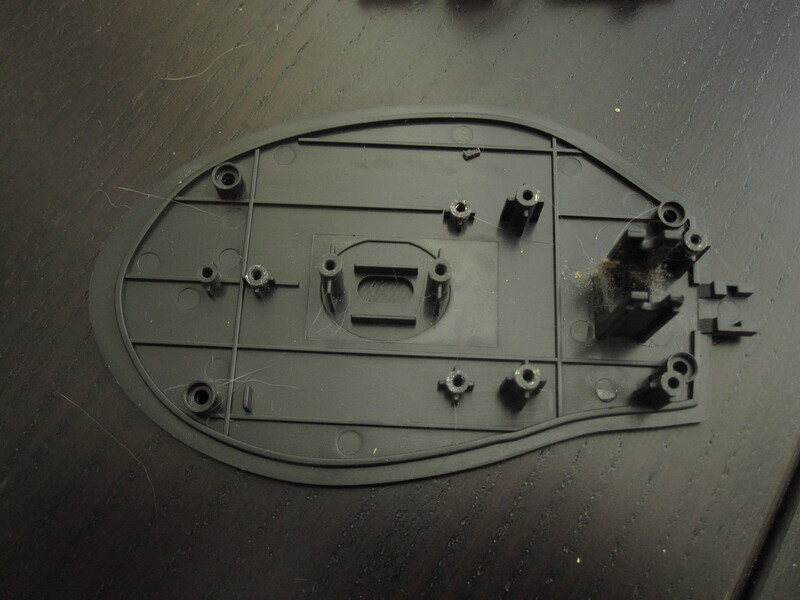

I have a Mionix gaming mouse that I bought in 2008 or 2009 and have been very happy with since. As any electronic device that has been in intimate contact with humans would, it has acquired a fair amount of filth over the years. Some of the grime has also worked its way into the scroll wheel which has started to act up. Recently I decided to do something about it and took it apart to try to clean it properly.

I will say right now that I didn't entirely succeed in this quest. While the mouse did get a lot cleaner, the scroll wheel still doesn't work properly and there are some corners in the plastic that I didn't manage to clean properly. The mouse is currently relegated to a junk box in the storage closet until I make another attempt at fixing it up or, more likely, throw it away. So this article mostly serves as a teardown guide.

Disassembly

This section is best explained in pictures.


After the top printed circuit board has been removed the side buttons can be removed from the case. I had to bend these quite a bit to get them out, but I found that they are very flexible and can take some force without breaking.

The top cover consists of two parts. A single screw holds them together.


Cleaning

As a first attempt I tried to simply scrub the case parts clean with a dishwashing brush and detergent. The dust washed off easily enough but this did nothing for the grime that made the plastic sticky. I let it soak overnight but this didn't do much for it either.

What finally worked somewhat was to scrub the parts really hard with an abrasive cleaning sponge. I used the lightly abrasive white surface, not the heavier green kind as I was afraid to scratch the plastic, although that might have worked better in hindsight.

I also cleaned the buttons and the scrollwheel, but this didn't turn out to have any effect on the function of the scrollwheel.

I wasn't able to remove all the old adhesive, which is what has attracted dust in this picture. Further, while the top side ended up fairly clean the ends of the case parts were still sticky afterwards.

Conclusion

While it looks a lot better now, I'm still hesitant to use it. The scroll wheel is still jerky and parts of the case are still sticky. Even if it had turned out basically good as new, I'm not sure I would redo the process. It was several hours of pretty gross work.

I suspect that at least some of the grime was actually the surface of the plastic that had decomposed after years in the sun, since my desk was previously next to a west-facing window. Although I hate to throw away tech that mostly still works, I did get a good seven or eight years of heavy use out of this ~600 SEK (~60 EUR, ~80 USD) mouse. I recently moved and in my new flat my desk no longer has a view of a window, so perhaps my new mouse will last even longer.

To expedite the development of my contemplated Xbox rsync file server Linux distribution, I would like the ability to mount the root filesystem over the network using NFS. The kernel requirements for this are documented in Documentation/filesystems/nfs/nfsroot.txt in the kernel source tree. nfsroot was slightly less featureful in 2.4 than in the current kernel, so make sure to get the old version if you are coding along. Also, if you are coding along then please do get in touch so I will know that I'm not the only person in the world to program for the classic Xbox in 2016.

Continuing from Booting X-DSL with a custom kernel where we disabled devfsd via kernel config patch, a suitable linuxboot.cfg for nfsroot looks something like this:

title XDSL unstable 2.4.32 NFS root

kernel KNOPPIX/bzImage.32

append root=/dev/nfs nfsroot=/srv/xbox-linux ip=192.168.1.188:192.168.1.21:::::off init=/etc/init rw video=xbox:640x480 kbd-reset

Note that no initrd should be necessary. 192.168.1.188 is the client (Xbox) IP address and 192.168.1.21 is the NFS server IP address. I could have used DHCP (the kernel built in this article supports it) but then I would also have to reconfigure my DHCP server, and that seemed like a lot of work for this toy project.
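To make the run of colons in the ip= parameter easier to read, here is a small sketch that assembles it field by field. It assumes the field order given in nfsroot.txt (client, server, gateway, netmask, hostname, device, autoconf); the variable names are made up for illustration.

```shell
# Field order as described in nfsroot.txt:
# ip=<client-ip>:<server-ip>:<gw-ip>:<netmask>:<hostname>:<device>:<autoconf>
client_ip=192.168.1.188   # the Xbox
server_ip=192.168.1.21    # the NFS server
gw_ip=                    # empty: no gateway given
netmask=                  # empty: left to the kernel's default
host_name=                # empty: not set
device=                   # empty: not set
autoconf=off              # no DHCP/BOOTP/RARP, use the static values above

ip_param="ip=${client_ip}:${server_ip}:${gw_ip}:${netmask}:${host_name}:${device}:${autoconf}"
echo "$ip_param"
# prints: ip=192.168.1.188:192.168.1.21:::::off
```

The five consecutive colons are simply the four empty fields between the server address and the final autoconf value.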

Start by opening fs/nfs/nfsroot.c and replacing

#undef NFSROOT_DEBUG

with

#define NFSROOT_DEBUG

to get some feedback from the NFS mounting process. Otherwise you will just get the uninformative message of

VFS: Cannot open root device "nfs" or 00:ff

Please append a correct "root=" boot option

Kernel panic: VFS: Unable to mount root fs on 00:ff

which tells you that /dev/nfs, which was specified in the root kernel command line parameter, was not set up correctly.
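If you prefer to script the NFSROOT_DEBUG change rather than edit the file by hand, something like the following should do. This is only a sketch: the function name is hypothetical, and it relies on sed -i, which GNU sed and BusyBox sed provide.

```shell
# Hypothetical helper: flip NFSROOT_DEBUG from #undef to #define in the given file.
enable_nfsroot_debug() {
    sed -i 's/^#undef NFSROOT_DEBUG/#define NFSROOT_DEBUG/' "$1"
}

# Usage, from the top of the kernel source tree:
#   enable_nfsroot_debug fs/nfs/nfsroot.c
```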

KCONFIG time

I will present several patches in this section. To keep track of them I recommend using the program quilt as I wrote about in a separate article.

Referring to Documentation/filesystems/nfs/nfsroot.txt, the first thing to enable is NFS support and IP autoconfiguration. Apply enable-nfsroot.patch, make bzImage, transfer the kernel image and boot.

This should fail because there are no network adapters available. The reason for this is that the Ethernet driver is built as a module instead of being included inside the kernel image. For a special purpose Linux distribution like X-DSL or Xebian that will basically never be run on anything other than an actual Xbox, there is no gain in having essential drivers in modules.
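A quick way to spot the module-versus-built-in problem is to look at the generated .config. The sketch below checks the three options that nfsroot.txt names for NFS root (CONFIG_NFS_FS, CONFIG_IP_PNP and CONFIG_ROOT_NFS). The function names are hypothetical, and the Ethernet driver's option is left out because its name is not given here; add it to the list if you know it.

```shell
# Hypothetical helper: succeed only if the option is built in (=y),
# as opposed to being a module (=m) or not set at all.
is_builtin() {
    # $1 = path to .config, $2 = option name such as CONFIG_ROOT_NFS
    grep -q "^$2=y\$" "$1"
}

# Report the state of the options needed for an NFS root.
check_nfsroot_config() {
    for opt in CONFIG_NFS_FS CONFIG_IP_PNP CONFIG_ROOT_NFS; do
        if is_builtin "$1" "$opt"; then
            echo "$opt: built in"
        else
            echo "$opt: NOT built in"
        fi
    done
}

# Usage, from the kernel source tree:
#   check_nfsroot_config .config
```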

Apply enable-builtin-nforce-driver.patch and try again. Now NFS will actually fire up and try to mount the root filesystem. There is confusion between the client and server however, resulting in errors being printed in the kernel console. Examining the situation closer using Wireshark revealed that the ancient Linux 2.4 kernel tries to use NFSv2 instead of version 3 or 4 which are more common today. The NFS server on my desktop computer (Linux 4.4) claims to still support NFSv2, but it doesn't seem to work very well. If the client requests a non-existing file, the server appears to respond with an "unsupported operation" which seems strange to me.

Fortunately I won't have to downgrade my desktop to Linux 2.4, since the 2.4 kernel does support NFSv3; it's just not the default (yet). To enable support for it, apply enable-nfsv3.patch and rebuild. Also add v3 as an NFS mounting option in linuxboot.cfg like so:

append root=/dev/nfs nfsroot=/srv/xbox-linux,v3 ip=...

If you are using quilt, your series file should at this point look like this:

disable-devfs.patch

enable-nfsroot.patch

enable-builtin-nforce-driver.patch

enable-nfsv3.patch

(Partial) Success

Finally, the root filesystem is mounted and the system boots! Almost... It fails during fsck because the device backing the root filesystem (normally /dev/loop0) is not found. Disabling fsck in /etc/fstab fixes this and allows the boot procedure to continue and eventually land in a proper root shell.
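The exact fstab change is not shown above. One common way to disable fsck for a filesystem is to set the sixth field of its fstab entry (the pass number) to 0. The following sketch prints a modified copy of an fstab with that change applied to the root entry; the function name is hypothetical and the output is meant to be reviewed before replacing the real file.

```shell
# Hypothetical helper: print the given fstab with the fsck pass number
# (6th field) of the root filesystem entry set to 0, so fsck skips it.
# Comment lines and all other entries are printed unchanged.
disable_root_fsck() {
    awk '$1 !~ /^#/ && NF >= 6 && $2 == "/" { $6 = 0 } { print }' "$1"
}

# Usage:
#   disable_root_fsck /etc/fstab > /tmp/fstab.new
```

Note that awk rewrites the changed line with single spaces between fields, which is harmless for fstab.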

However, the system will not shut down cleanly. At this point it becomes clear that DSL is maybe not a very solid foundation to build a specialized operating system on. The major part of X-DSL's shutdown process is implemented by a gigantic pile of shellscript in /etc/init.d/knoppix-halt. As part of this procedure, the network interfaces are disabled before the filesystems are unmounted. It should in theory unmount network filesystems first but, being a gigantic pile of shellscript, it understandably isn't completely reliable. I guess this is what they call a "minimalistic init system".

I could try to reorder lines in this file, but that would create a maintenance burden that I'm not very interested in. I might come back to this later, but first I should investigate Xebian, a Debian port for the classic Xbox. The reason I tried X-DSL before Xebian is that it seemed to have been maintained for longer. Xebian basically had one major release with some minor updates before its developers moved on. Still, being based on Debian I expect it to be fairly versatile and solid.

I will skip right to the point: The magic command for creating an ISO9660 image for a disc that is bootable both on a PC BIOS and on a modded Xbox goes something like this:

$ mkisofs -udf -r -joliet -eltorito-catalog isolinux/boot.cat -boot-info-table -eltorito-boot isolinux/isolinux.bin -no-emul-boot -boot-load-size 4 -o basic.iso basic/

Explanations follow:

- -udf creates a UDF filesystem in addition to the standard ISO9660. Apparently this is required for the Xbox to recognize the disc as bootable.
- -r enables the creation of Rock Ridge file metadata. This allows for longer filenames, permissions and other improvements in POSIX systems. Probably used once the Linux kernel is booted from the disc. Unlike the -rock parameter, -r overrides some of the metadata with default values that make more sense for removable media.
- -joliet enables the creation of Joliet file metadata. Same purpose as Rock Ridge but for Windows based systems. Not sure if strictly required here (but it seems related to UDF according to genisoimage's man page).
- -eltorito-catalog specifies the location of the "boot catalog" that is supposedly ignored by everything but is still required according to the spec. Note that isolinux/boot.cat specifies a file to be created inside the image file. It does not (need to) exist in the source directory basic/.
- -eltorito-boot specifies a boot image to be embedded in the image. This is the path to an existing file inside the CD root directory specified last on the command line. The boot image is an executable file to be run without an operating system, typically a boot loader. As I understand it this is comparable to the code inside the MBR of a hard drive.
- -boot-info-table specifies that the "partition table" inside the boot image should indeed be updated with the actual CD layout before embedding it in the image file. Note that this makes permanent changes to the source file.
- -no-emul-boot specifies that the boot image should be run "as is". Default is to emulate a floppy drive, in which case the boot image must be an actual floppy image.
- -boot-load-size specifies the size of the boot image in fake "sectors" (512 bytes). This should typically be the size of a CD sector, which is 2048 bytes in "mode 1". If this is smaller than the boot image (as in this case) it is truncated, which ISOLINUX is designed to handle. If unspecified the entire image is embedded.
- Finally, -o specifies the filename to write and basic/ is the directory which will become the CD root directory in the image.
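The value 4 passed to -boot-load-size follows from the two sector sizes mentioned above. As a quick check of the arithmetic (the variable names are only for illustration):

```shell
boot_load_size=4      # value passed to -boot-load-size
virtual_sector=512    # bytes per fake "sector"
cd_sector=2048        # bytes per CD sector in "mode 1"

loaded=$((boot_load_size * virtual_sector))
echo "$loaded bytes loaded = $((loaded / cd_sector)) CD sector(s)"
# prints: 2048 bytes loaded = 1 CD sector(s)
```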

Further details

The ISO9660 format, due to being very old, is fairly limited in its vanilla shape and has therefore been extended several times over the years. That's why there are now four ways of encoding the filename and other metadata on a CD (ISO9660, Rock Ridge Interchange Protocol, Joliet and UDF).

Vanilla ISO9660 supports filenames of up to 31 characters, and the allowed list of characters is short. But for compatibility with DOS, typically only 8+3 characters are allowed in implementations (like genisoimage/mkisofs). -l unlocks the full length of 31 chars, but I'm not sure if this is supported by the Xbox BIOSes.
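To get a feeling for how restrictive the 8+3 form is, the sketch below tests a couple of filenames against it. It is a simplification that assumes only upper-case letters, digits and underscore are allowed; the function name is hypothetical, and real tools like mkisofs rename offending files instead of rejecting them.

```shell
# Hypothetical helper: succeed if the name fits the 8+3 form using only
# upper-case letters, digits and underscore (a simplified reading of the rules).
is_8dot3() {
    printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[A-Z0-9_]{1,8}(\.[A-Z0-9_]{0,3})?$'
}

for name in DEFAULT.XBE linuxboot.cfg; do
    if is_8dot3 "$name"; then
        echo "$name: fits 8+3"
    else
        echo "$name: does not fit 8+3"
    fi
done
# prints:
#   DEFAULT.XBE: fits 8+3
#   linuxboot.cfg: does not fit 8+3
```

linuxboot.cfg fails on two counts: it is lower case and its base name is nine characters long, so it depends on one of the extensions to keep its name.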

As alluded to earlier, -r overrides some of the file metadata with sensible values. Examples are squashing uid/gid to 0 (since the user who inserted the disc is usually treated as the "owner" of its contents), removing write permissions for everyone (read-only media), and allowing read access for user, group and others alike (CDs are not really multi-user systems).
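The effect of -r on permission bits can be sketched as a small calculation. This follows my reading of the genisoimage man page, which is an assumption worth verifying: all read bits are set, all write bits are cleared, and all execute bits are set if any execute bit was set on the source file. The function name is hypothetical.

```shell
# Hypothetical helper: given an octal mode such as 640, print the mode
# the file would be expected to get on the disc when -r is used.
rr_mode() {
    mode=$((0$1))                    # leading 0 makes the shell read it as octal
    new=$((0444))                    # read for user, group and others; no write
    if [ $((mode & 0111)) -ne 0 ]; then
        new=$((new | 0111))          # any execute bit set: execute for everyone
    fi
    printf '%o\n' "$new"
}

rr_mode 640   # prints 444
rr_mode 750   # prints 555
```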

El Torito is the name of the ISO9660 extension for bootable optical media. This is used by all PCs in BIOS mode. (U)EFI was just a baby when the Xbox was new, works differently and is outside the scope of this article.

The Xbox BIOS does not consider El Torito however. It simply looks for a default.xbe file and executes it if found (and the disc is valid, unless those checks have been defeated like in a modded Xbox). The gotcha is that the original Xbox BIOS (and hacked versions thereof) only supports UDF, none of the other file metadata formats. It makes some sense since games are normally only stored on DVDs, and DVDs typically (but not always) use UDF.

The free BIOS Cromwell however, does support one or more of the other formats (I haven't checked which). And as you may know it does not work with XBEs at all. Instead, Cromwell is typically used to directly load a Linux kernel as specified in the linuxboot.cfg text file.
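Since each boot path looks for a different file, it can be worth checking the source directory before building the image. The sketch below is a hypothetical helper that looks for default.xbe (Xbox BIOS), isolinux/isolinux.bin (PC BIOS via El Torito) and linuxboot.cfg (Cromwell). It assumes that default.xbe and linuxboot.cfg sit in the disc root and that the names are spelled in lower case; adjust the list to match your tree.

```shell
# Hypothetical helper: report which of the boot files are present in the
# directory that will become the CD root. Returns non-zero if any is missing.
check_dual_boot_tree() {
    root=$1
    status=0
    for f in default.xbe isolinux/isolinux.bin linuxboot.cfg; do
        if [ -f "$root/$f" ]; then
            echo "found   $f"
        else
            echo "missing $f"
            status=1
        fi
    done
    return $status
}

# Usage:
#   check_dual_boot_tree basic/
```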

Test case

This was tested using Ed's Xebian 1.1.4 basic edition, available from the Xbox-Linux Sourceforge project page.

References

See messages 20040714-Re dual boot iso-4805.eml and 20040714-Re dual boot iso-4807.eml on the xbox-linux-user mailing list.